As AI applications move from experimental prototypes to production environments, organizations face significant challenges ensuring reliability, visibility, and performance. Traditional monitoring tools fall short when dealing with complex multi-agent systems, leaving companies unable to effectively debug or improve their AI implementations. HoneyHive provides an AI agent observability and evaluation platform that enables organizations to systematically test, evaluate, and monitor AI agents throughout their entire lifecycle. Built on OpenTelemetry standards, the platform offers comprehensive visibility into AI systems, showing not just outputs but the entire decision journey—revealing how conclusions were reached, where systems falter, and what patterns lead to failures. By closing the loop between production incidents and development, HoneyHive creates a complete system of record that versions all traces, prompts, tools, datasets, and evaluations, enabling enterprises to deploy AI with confidence and achieve real ROI from their investments.

AlleyWatch sat down with HoneyHive CEO and Founder Mohak Sharma to learn more about the company’s launch, business, its future plans, and recent funding round.

Who were your investors and how much did you raise?

We raised a total of $7.4M in Seed funding, consisting of a $5.5M Seed round led by Insight Partners and our previously unannounced $1.9M Pre-Seed round led by Zero Prime Ventures. Our Seed round saw participation from Zero Prime Ventures, 468 Capital, and MVP Ventures, while our Pre-Seed included AIX Ventures, Firestreak Ventures, and notable angel investors like Jordan Tigani (CEO at MotherDuck) and Savin Goyal (CTO at Outerbounds). We’re particularly excited to welcome George Mathew, Managing Director at Insight Partners, to our board of directors.

Tell us about the product or service that HoneyHive offers.

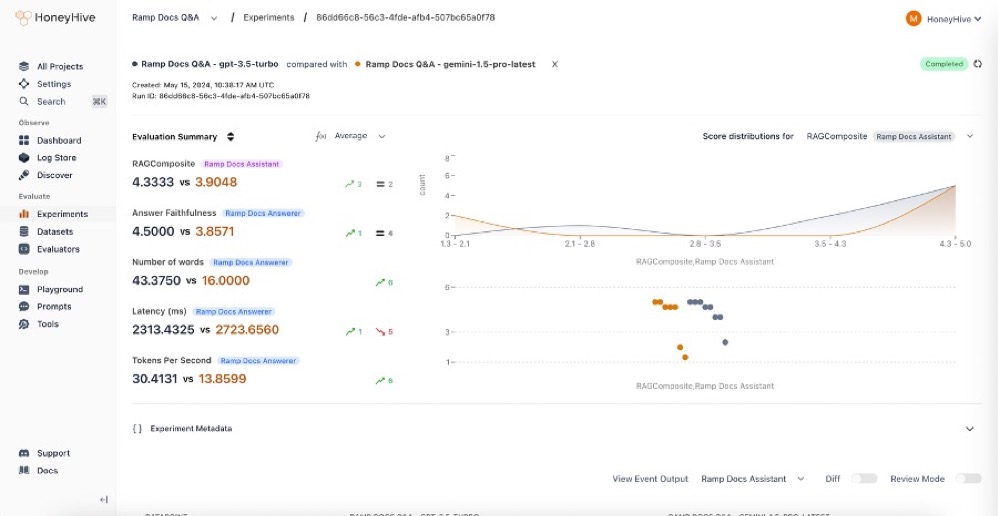

At HoneyHive, we provide an AI agent observability and evaluation platform that helps organizations build reliable AI-powered products. Our platform enables teams to systematically evaluate AI agents throughout the entire lifecycle – from initial development through production deployment. Built on OpenTelemetry standards, we offer comprehensive visibility into AI systems, showing not just outputs but how conclusions were reached, where systems get stuck, and what patterns lead to failures. We close the loop between production incidents and development by capturing failure scenarios, creating test cases, and ensuring future iterations don’t repeat mistakes. Our platform serves as a complete system of record that versions all traces, prompts, tools, datasets, and evaluators throughout the AI development lifecycle.

What inspired the start of HoneyHive?

Dhruv (Dhruv Singh, Cofounder) and I met as roommates at Columbia University, where we often discussed starting a company together. After graduation, we gained experience in different areas of AI and data science. I worked at Templafy, building out data and AI platforms, while Dhruv worked at Microsoft, including on the OpenAI Innovation Team within the Office of the CTO, which was primarily responsible for collaborating with OpenAI. Our experiences revealed a significant gap between AI prototypes and production-ready systems. We observed teams building promising AI applications that would break in unexpected ways when deployed, with no proper tools to understand what was happening or how to systematically improve. This fundamental challenge inspired us to create HoneyHive – a new “DevOps stack” for AI focusing especially on agentic workflows and complex, multi-step logic.

How is HoneyHive different?

HoneyHive differentiates itself in several ways:

- It bridges the gap between development and production environments, allowing teams to catch regressions and failures quickly, then systematically fix them.

- It’s designed specifically for multi-step AI pipelines and agentic workflows, tracking each step as a first-class entity with custom metrics and checks.

- Unlike traditional software observability tools, HoneyHive is built to handle huge volumes of AI “events,” including multi-megabyte spans from large context windows.

- It’s truly enterprise-ready, offering various deployment options including standard SaaS, single-tenant SaaS, and on-premises deployments in customers’ own VPCs behind their firewalls.

- It’s built on OpenTelemetry standards to integrate with existing infrastructure rather than replacing it.

The platform creates a collaborative system of record for AI development, ensuring full auditability and traceability.

What market does HoneyHive target and how big is it?

We target organizations building and deploying AI applications, ranging from small startups to Fortune 100 enterprises. Initially, we focused on smaller AI startups, but by 2024 we had expanded to serve Fortune 100 insurance companies and banks looking to deploy generative AI in production with reliability and compliance. Our platform is particularly valuable for teams building complex AI pipelines with multi-step logic, retrieval from vector databases, and agentic workflows. The enterprise AI market is substantial and growing rapidly as more companies move from experimental AI projects to production deployments seeking real ROI.

We estimate our TAM at $36.2B, but given that AI is still in its nascent stage, the real market opportunity is likely larger.

What’s your business model?

We offer a usage-based pricing model with different deployment options for enterprises, including standard SaaS, single-tenant SaaS, and on-premises deployments. We’ve designed our pricing structure to be accessible for both startups and Fortune 100 companies, since it’s based on usage, i.e., the volume of telemetry logged from your AI agents.

How are you preparing for a potential economic slowdown?

We’re focused on building a sustainable business that delivers clear ROI for our customers. By helping companies deploy AI that actually works in production, we’re directly tied to their ability to generate value from AI investments, which remains a priority even in challenging economic times since AI is often looked upon as a way to reduce operating expenditure. We’re also maintaining extreme capital efficiency while we grow and ensuring we have multiple years of runway to weather any market conditions.

What was the funding process like?

Our funding process was driven by strong product-market fit and customer validation. We were fortunate to connect with investors who deeply understood the AI space and recognized the critical need for evaluation and observability as AI moves into production environments. Our Seed round came together in less than three weeks with multiple term sheets, as investors saw the traction we were gaining with early customers, particularly within the Fortune 500.

What are the biggest challenges that you faced while raising capital?

The biggest challenge was conveying the technical complexity of what we’re solving in a way that was accessible to investors. AI observability isn’t as straightforward as traditional software monitoring, especially when dealing with multi-step agent workflows and the probabilistic nature of large language models. We needed to help investors understand why existing DevOps tools fall short for AI and why our approach offers a fundamentally better solution.

What factors about your business led your investors to write the check?

I believe several factors influenced our investors’ decisions. First, our strong growth trajectory – we saw a more than 50x increase in requests logged in 2024 alone. Second, our ability to secure enterprise customers early, including Fortune 100 companies. Third, our technical approach of leveraging traces for evaluations and monitoring multi-agent architectures, which also spoke to our team’s deep technical expertise and execution capabilities in a rapidly evolving space. Our team consists of seasoned engineers from companies like Microsoft, Amazon, JP Morgan, Patreon, and others, which provided further validation.

What are the milestones you plan to achieve in the next six months?

Over the next six months, we’re focused on enabling enterprise-wide AI deployment through expanded integrations and deployment models, including supporting several new enterprise pilots in the pipeline. We’re building advanced evaluation tooling for running multi-turn agent simulations, making sure complex agents like Devin can be thoroughly tested using technology similar to that used by Waymo and other self-driving companies. We’re also accelerating our product development to meet growing market demand and expanding our team in key areas like product engineering, systems infrastructure, and developer relations.

What advice can you offer companies in New York that do not have a fresh injection of capital in the bank?

Focus relentlessly on customer value and build something people truly want. When we started HoneyHive, we spent countless hours talking to AI engineers and teams to deeply understand their pain points. This customer-centric approach helped us build something people actually wanted, which in turn made fundraising easier. In today’s environment, capital efficiency is crucial – prioritize what truly moves the needle for customers and be willing to adapt quickly based on their feedback.

Where do you see the company going in the near term?

In the near term, we’re focused on scaling our product and team following our general availability launch. We’re particularly focused on advancing our evaluation capabilities for emerging agent architectures, expanding our observability features, and deepening our enterprise integration options. Our mission is to help more organizations bridge the gap between AI prototypes and reliable production systems, and this funding will accelerate our ability to deliver on that mission.

What’s your favorite spring destination in and around the city?

When I can find time away from building HoneyHive, I love visiting Jones Beach State Park in spring and summer. The beach is spectacular, surprisingly close to the city, and it’s a perfect place to clear your head and gain some perspective when you’re deep in the startup grind.