A Braille feature that turns an iPhone into a notetaker, a new Reading Mode that makes text easier to read for people with a range of disabilities such as dyslexia or low vision, and Live Captions on the Apple Watch: these are among the accessibility features Apple will roll out later this year. Cupertino made the announcement ahead of Global Accessibility Awareness Day on May 15.

Apple is bringing as many as 20 new accessibility features to its core products, including the iPhone, iPad, Mac, Apple Watch, and Vision Pro. Take Magnifier, for example—a tool that allows people who are blind or have low vision to zoom in, read text, and detect objects around them using the iPhone or iPad. That feature is now coming to the Mac.

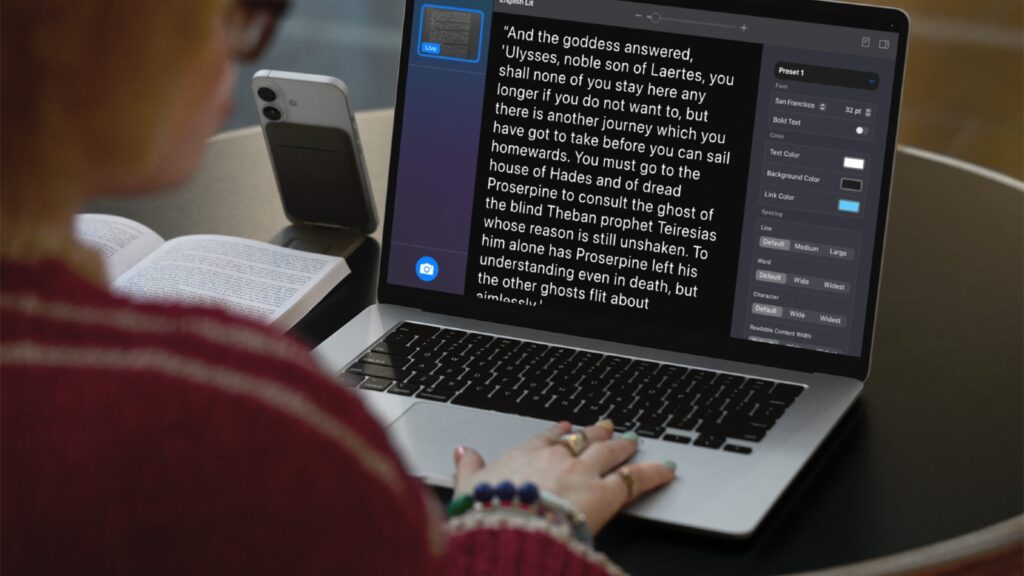

iPhone will soon gain a new accessibility reader. (Image credit: Apple)

Magnifier for Mac connects to the user’s camera—whether it’s an iPhone using Continuity Camera or a USB camera—allowing them to zoom in on anything and view it on the Mac’s larger display. The feature could be a game-changer for people with visual impairments.

Meanwhile, Apple is adding a new section to the App Store product pages of apps and games that highlights their accessibility features, making it easy for users to check whether an app or game supports them before downloading it. Those features include VoiceOver, Voice Control, Larger Text, Sufficient Contrast, Reduced Motion, and captions, among others.

Accessibility Nutrition Labels will be available worldwide on the App Store. Developers will have access to guidance on what criteria apps need to meet before showing accessibility information on their product pages.

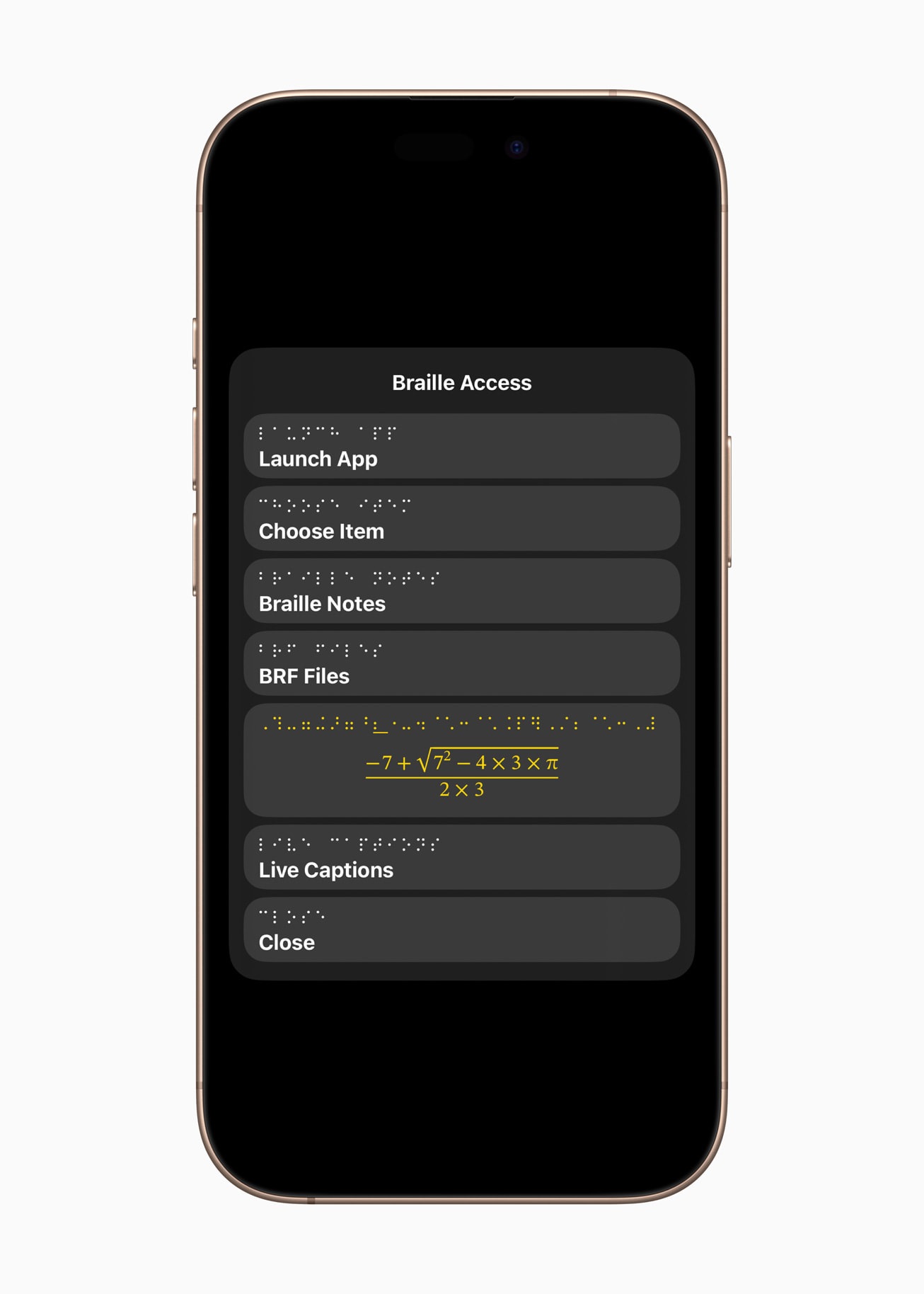

Apple will offer a new Braille experience across devices. (Image credit: Apple)

Perhaps the most important—and truly remarkable—feature is Braille Access, which basically turns the iPhone, iPad, Mac, or Vision Pro into a braille notetaker. Users can launch any app by typing with Braille Screen Input or a connected braille device, jot down notes in braille format, and perform calculations using Nemeth Braille. They can also open Braille Ready Format files directly within Braille Access, making it possible to access books and documents created on a braille notetaking device. In addition, Live Captions integration allows conversations to be transcribed directly onto braille displays.

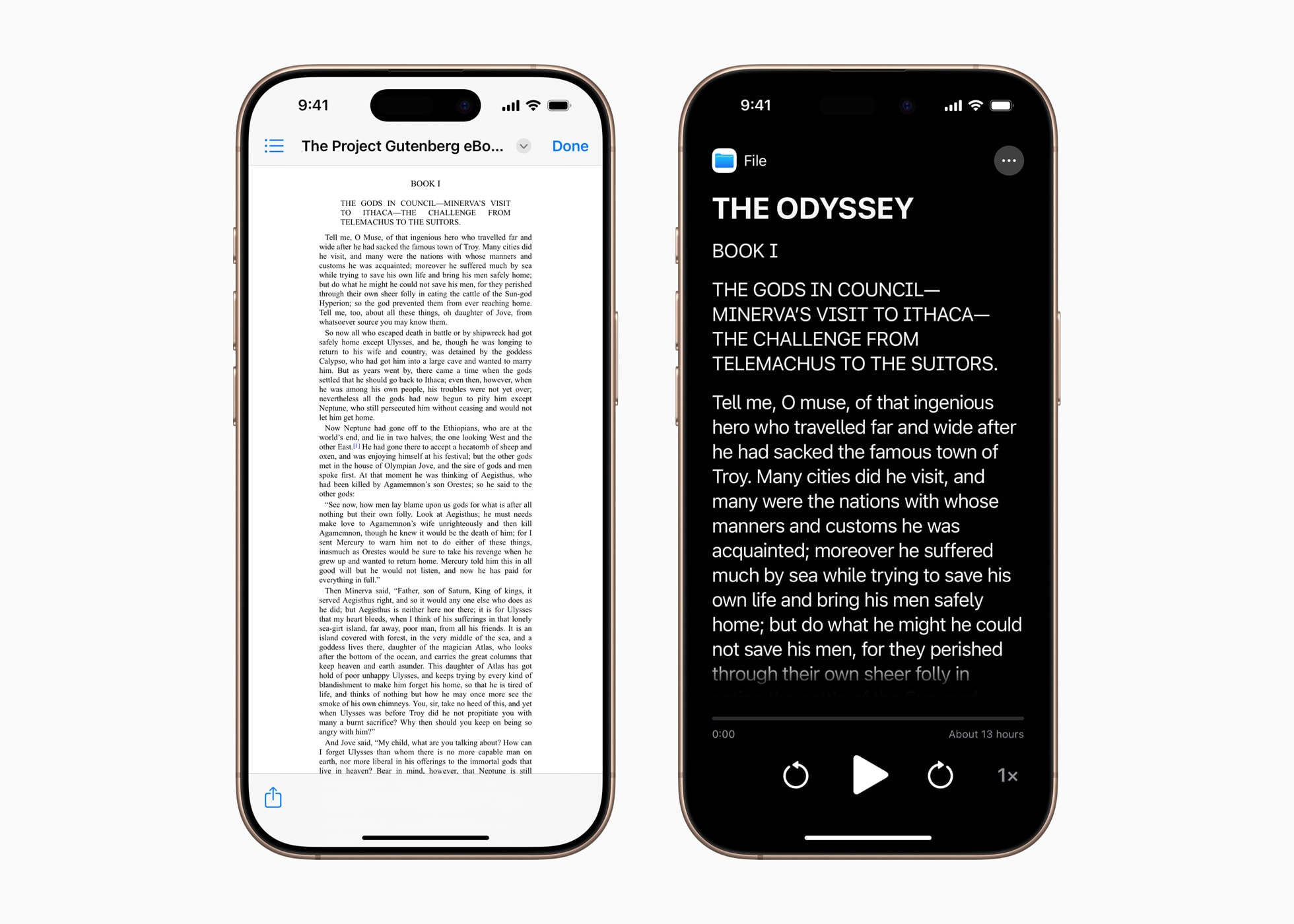

The long list of accessibility features also includes Accessibility Reader, a new reading mode available on iPhone, iPad, Mac, and Apple Vision Pro, which is designed to make text easier to read for people with disabilities such as dyslexia or low vision. Apple is also bringing Live Listen controls to the Apple Watch, along with new features including real-time Live Captions. Another addition is Vehicle Motion Cues on Mac, which can help reduce motion sickness when looking at a screen in a moving vehicle.

Meanwhile, Personal Voice, which allows people at risk of speech loss to create a voice that sounds like them using AI and on-device machine learning, is now faster and easier to set up: instead of requiring users to read 150 phrases, Apple has streamlined the process.

Apple is also bringing its accessibility tech to the Vision Pro, turning its mixed-reality headset into a proxy for eyesight. With the new update, which takes advantage of the headset's main camera, users can magnify anything in view, including their surroundings.

iPhone and Apple Watch users will gain a new feature: Live Listen with real-time captions. (Image credit: Apple)

The company said it is also adding a new protocol in visionOS, iOS, and iPadOS to support brain-computer interfaces (BCIs) through its Switch Control accessibility feature. This feature enables alternate input methods, such as controlling aspects of your device using head movements captured by the iPhone’s camera.

A report by The Wall Street Journal explains that Apple developed this new BCI standard in collaboration with Synchron, a brain implant company whose technology allows users to select icons on a screen simply by thinking about them. Synchron makes an implant called the Stentrode, which is placed in a vein near the brain’s motor cortex. Once implanted, the Stentrode can read brain signals and translate them into movement on devices, including iPhones, iPads, and Apple’s Vision Pro headset.

And while Apple may have plans to release its standards, it could take years for the technology developed by Synchron and Elon Musk’s brain-interface company, Neuralink, to reach mainstream status.

Accessibility in tech has always garnered attention, but at times that attention has amounted to little more than talk. Over the past few years, however, Apple and other tech companies have made accessibility features a foundational part of the products we use every day rather than treating them as an afterthought. This shift shows that accessibility in tech truly matters, and that designing for accessibility by default is the right way forward. Observers call it a major win for accessible technology, but there is still a long road ahead before the entire tech industry fully supports accessibility.