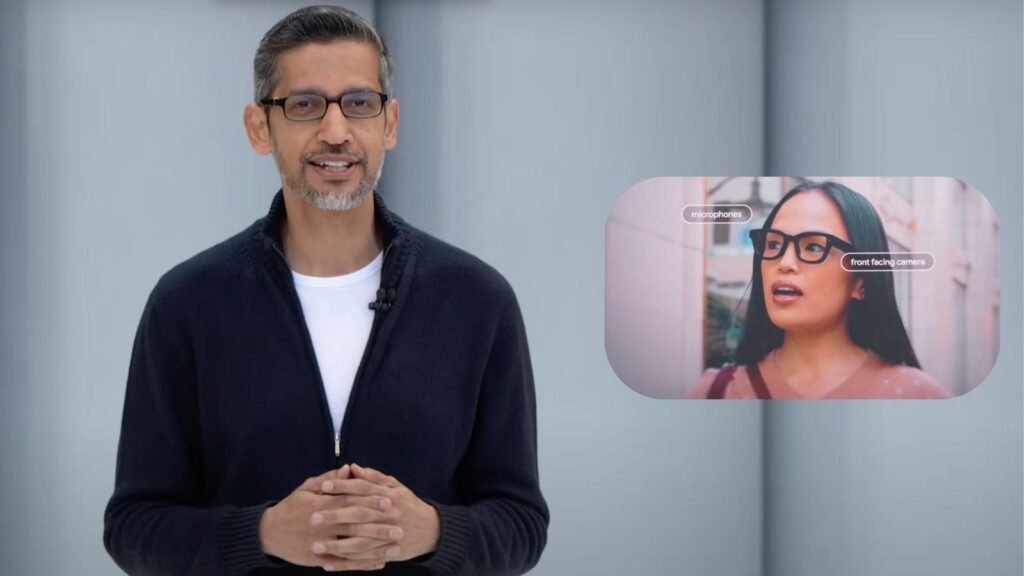

Google now brings GenAI to more people than anyone else, with more than 400 million monthly users of Gemini products, Sundar Pichai, CEO of Google and Alphabet, claimed on the sidelines of the Google I/O conference in California. “More intelligence is available for everyone, everywhere. And the world is responding, adopting AI faster than ever before…,” he said, adding that the company had released more than a dozen foundation models since the last edition of the conference.

As expected, this year's edition of Google’s annual conference is focused on AI, with the search and advertising giant showcasing a slew of new products and updates. “There’s a hard trade-off between price and performance. Yet, we’ll be able to deliver the best models at the most effective price point… We are in a new phase of AI product shift,” Pichai said in his opening keynote.

Sir Demis Hassabis, Nobel laureate in Chemistry and CEO and co-founder of Google DeepMind, announced an updated version of Gemini 2.5 Flash. Outlining DeepMind’s vision for the future, he said that to be useful in everyday life, AI has to be intelligent, understand the context you are in, and be able to plan and take action on your behalf. “This is our ultimate vision for Gemini… to transform it into a universal AI system, an AI that is personal, proactive and powerful, and one of our key milestones on the road to AGI.”

One highlight is Google Beam, an AI-first video communication platform that has evolved from Project Starline, which Pichai said brings the world closer to having a “natural free-flowing conversation across languages”. Beam instantly translates spoken languages in “real-time, preserving the quality, nuance, and personality in their voices”.

Google also showcased Veo 3, its updated video generation model, which now comes with native audio generation, and Imagen 4, its upgraded image generation model. Taking these creative capabilities to a new level is Flow, an AI filmmaking tool custom-designed for the latest Veo and Imagen models, together with Gemini. “Flow can help storytellers explore their ideas without bounds and create cinematic clips and scenes for their stories,” the company said. The tool offers camera controls, a scene builder and asset management.

Google Search is also seeing significant changes, with an end-to-end AI Mode launching in the US this week that lets users type long prompts directly into Search. “AI mode is where we will bring our first frontier capabilities into search,” Pichai said. AI Mode also brings shopping, with agentic checkout and a virtual try-on tool that works directly on the user’s own photos.

“Under the hood, AI Mode uses our query fan-out technique, breaking down your question into subtopics and issuing a multitude of queries simultaneously on your behalf. This enables Search to dive deeper into the web than a traditional search on Google, helping you discover even more of what the web has to offer and find incredible, hyper-relevant content that matches your question,” explained Liz Reid, VP and head of Google Search, who called AI Mode a glimpse of what is to come. “You can bring your hardest questions right to the search box.”

Meanwhile, Hema Budaraju, vice president of product management for Search, said AI Overviews in Google Search are now available in 40 new languages, including Urdu. “With this expansion, AI Overviews are now available in more than 200 countries.” Pichai said AI Overviews, which now have more than 1.5 billion users, are driving 10% growth in search.

In what was almost a footnote, Google announced Android XR, a new platform built for the Gemini era that will usher in new hardware such as headsets. Samsung’s Project Moohan will be the first Android XR device and will be available for purchase later this year. Glasses running Android XR also made their debut at the keynote, more than a decade after their predecessor, Google Glass, was showcased at I/O. However, there is no launch date yet for the glasses, the most exciting product showcased at the event.

The author is in California on the invite of Google.